The Subjectivity of Computers

CMAP (Centre de Mathématiques APpliquées) UMR CNRS 7641, École polytechnique, Institut Polytechnique de Paris, CNRS, France

france telecom, France Telecom R&D

[Site Map, Help and Search [Plan du Site, Aide et Recherche]]

[The Y2K Bug [Le bug de l'an 2000]]

[Real Numbers don't exist in Computers and Floating Point Computations aren't safe. [Les Nombres Réels n'existent pas dans les Ordinateurs et les Calculs Flottants ne sont pas sûrs.]]

[Please, visit A Virtual Machine for Exploring Space-Time and Beyond, the place where you can find more than 10.000 pictures and animations between Art and Science]

(CMAP28 WWW site: this page was created on 07/11/1995 and last updated on 10/03/2024 17:20:39 -CEST-)

(published in Communications of the ACM, volume 36, number 8, 08/1993)

Abstract: Real numbers do not exist in a computer.

Ignoring this fact can lead to erroneous results when using floating point computations.

Keywords: Rounding-off Errors, Real Numbers, Floating Point Computations.

Contents:

Introduction:

- 1-the infinite Real number field is mapped on many different

finite floating-point number sets and these sets

are not them'selves fields (see for example the KAM theorem that says

important things about the consequences

of the approximation of Real numbers with rational numbers).

- 2-round off errors are not always negligible: when they occur, they

imply in particular the loss of following

properties: associativity of the multiplication and

distributivity of the multiplication over the addition;

mathematical equivalent formalisms do not give then equivalent programs when

implemented. Moreover, when changing

the compiler used (for example when updating the operating system of the computer used)

one may discover that old

programs recompiled do not give anymore the results expected (because of a different

ordering of the elementary

operations). The situation could become worse with the sophisticated new

microprocessors where very complex

scheduling algorithm are implemented...

- 3-the widespread usage of heterogeneous cooperations must take these facts into

account (where 'heterogeneous'

means "different hardwares" and/or "different compilers").

- 4-simple methods can be implemented to detect the sensitivity to round off errors

in complex existing programs

(for example in fluid dynamics).

- 5-most programs ask for reproductibility; when using dynamical systems (for random number

generation or cryptographic applications,...) it could become impossible

to have reproductibility, for example, when changing the computing environment.

1 - Sensitivity to the accuracy of numerical values:

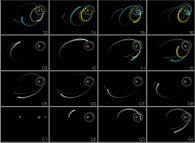

The Edward Lorenz's notion of "sensitivity to initial conditions" (see "The Lorenz attractor -sensitivity to initial conditions (displayed as the central point of each frame)-"

and [01]) must

be extended and given the more general name "sensitivity to the accuracy of numerical values".

1.1 - Rounded figure errors:

The internal accuracy of computers is limited (in general, numerical values are

represented over 32 or 64 bits, but, even

with a higher degree of accuracy, the phenomenon described here will still

occur, if slightly later, since the number

of decimals required for this type of process generally increases exponentially

with the number of iterations). During

each multiplication, figures are lost; this

implies that the results obtained will therefore depend on the order of the

operations, and the usual associative

property of multiplication, for example,

will no longer exist. The way in which the computer program is written

(particularly with regard to parentheses) and the compiler used (and its options)

will play an important role in the value of the results obtained

(see the paragraph 1.2).

Moreover, not all computers represent and handle figures in the same

way. In these circumstances, two strictly identical calculations carried out on

different machines could give different results. Is it therefore necessary to

introduce the notion of computer subjectivity or the one of numerical relativity?

This phenomenon may be observed by studying the Lorenz attractor

(see " The Lorenz attractor").

The same program is executed on three different machines: an IBM RS6000

(the "Red" computer), an

IBM ES9000 (the "Green" computer) and finally a Silicon Graphics INDIGO XS24

(the "Blue" computer). Three different

numerical integration methods were tested:

Euler of the first order,

Runge-Kutta of the second order and

Runge-Kutta of the fourth order; the

three of them, of course, react in the

same way. It should be mentioned that,

paradoxically, in these circumstances the

high-order methods (the most stable and most accurate methods, mathematically

speaking) are likely to be less "efficient" in terms of numerical accuracy since they

carry out a much higher number of multiplications than low-order

methods... It is also worth noting that errors will occur whatever the method

used. The phenomenon highlighted here is the discrepancy between several

computers in relation to the same program, regardless of whether the

program is good or not (in terms of certain arbitrary criteria).

As one would expect, at the beginning of the calculations (up to

approximately iteration 4700), the three machines give the same results (errors

were too small to be visible on the

images). Then, gradually, the results

begin to differ but only very slightly. However, at approximately iteration

4980, the ES9000 suddenly separates

from the RS6000 and INDIGO. Finally,

at approximately iteration 5060, the RS6000 and INDIGO diverged (see

"Computation of the Lorenz attractor on three different computers (the Red one, the Green one and the Blue one: sensitivity to rounding-off errors)" with

(x0,y0,z0) = (0.01,0.01,0.01)

and Dt = 0.01).

Other computers were also used during these experiments (Alliant

FX2800, Dec VAX 9000, Silicon

Graphics 4D20, Sony NWS 3260). With

the Alliant FX2800, sensitivity to initial

conditions complicated the analysis of the situation still further; its 'scc' compiler

did not set some constants with their exact values.

It should be stressed that two points with different "colors", which look

close together, generally correspond to

totally different instants, except in the

first iterations.

Thus, for any instant which is sufficiently distant from the

temporal origin of the computation, the forecasts carried out are in complete

contradiction to each other, even if the

form of the attractor is retained [04]. This

is not at all satisfactory, as our aim here

is to make forecasts with regard to the development of the system based on

fixed initial conditions. So which machine is right? Unfortunately none of

them is, and even if we found for N computers which all provided the same

result, it would obviously not be the correct one.

Finally, these computations are sensitive to the integration method

used (see "The Lorenz attractor -sensitivity to integration methods used (Red=Euler, Green=Runge-Kutta/2nd order, Blue=Runge-Kutta/4th order)-").

(more visualizations)

1.2 - Computer-based formulation of the model:

the

Verhulst dynamics [02], models the

variation of a population of individuals by the following iteration:

2

X = (R+1)X - RX

n n-1 n-1

('R' denotes the growing rate,

where R > 2.57, it shows so-called "chaotic" behaviour).

Remember that, in this case,

multiplication is no more associative, the

above iteration in fact shows five families of formulation (as far as numerical

results are concerned, any other possible form gives the same result as one of

these five forms). As the following tables

indicate, each of them, functioning under

the same conditions (C program

in double precision, where R = 3.0) gives

five sets of results which are totally

incompatible, after a very small number of iterations:

IBM ES9000:

(R+1)X-R(XX) (R+1)X-(RX)X ((R+1)-(RX))X RX+(1-(RX))X X+R(X-(XX))

X(00) = 0.500000 0.500000 0.500000 0.500000 0.500000

X(10) = 0.384631 0.384631 0.384631 0.384631 0.384631

X(20) = 0.418895 0.418895 0.418895 0.418895 0.418895

X(30) = 0.046399 0.046399 0.046399 0.046399 0.046399

X(40) = 0.320185 0.320183 0.320188 0.320182 0.320189

X(50) = 0.063406 0.064521 0.061895 0.064941 0.061244

X(60) = 1.040381 0.846041 0.529794 1.319900 1.214070

X(70) = 0.004104 1.199452 0.873553 0.573637 0.000009

X(80) = 0.108044 0.121414 1.260726 0.395871 0.280590

X(90) = 0.096374 0.089244 0.582157 0.344503 1.023735

IBM RS6000:

(R+1)X-R(XX) (R+1)X-(RX)X ((R+1)-(RX))X RX+(1-(RX))X X+R(X-(XX))

X(00) = 0.500000 0.500000 0.500000 0.500000 0.500000

X(10) = 0.384631 0.384631 0.384631 0.384631 0.384631

X(20) = 0.418895 0.418895 0.418895 0.418895 0.418895

X(30) = 0.046399 0.046399 0.046399 0.046399 0.046399

X(40) = 0.320177 0.320184 0.320188 0.320190 0.320189

X(50) = 0.067567 0.063747 0.061859 0.060822 0.061486

X(60) = 0.001145 0.271115 0.616781 0.298613 1.307350

X(70) = 1.296775 1.328462 0.486629 0.938605 1.054669

X(80) = 0.553038 0.817163 1.277151 1.325437 0.617058

X(90) = 0.094852 0.154184 1.174162 0.148151 0.237355

None of the columns below is correct and the same would apply if there

were only one column... It is worth noting that the same program written in

Fortran produces the same behaviour pattern. Finally, it should be noted that

the optional optimization of a generated code will also affect the results.

For example, the following iteration:

X = (R+1)*X - R*X*X;

compiled and executed on a Silicon Graphics Power-Challenge M

(IRIX release 6.2 and C release 7.0)

gives two incompatible sets of results using two different

optimization options:

Power-Challenge M Silicon Graphics (R8000, IRIX 6.2, cc 7.0):

option '-O2' option '-O3'

X(00) = 0.500000 0.500000

X(10) = 0.384631 0.384631

X(20) = 0.418895 0.418895

X(30) = 0.046399 0.046399

X(40) = 0.320184 0.320188

X(50) = 0.063747 0.061859

X(60) = 0.271115 0.616781

X(70) = 1.328462 0.486629

X(80) = 0.817163 1.277151

(it is noteworthy that to exhibit this phenomenon,

redondant parentheses must be avoided).

Figure "N-body problem integration (N=4: one star, one heavy planet and one light planet with a satellite) computed with 2 different optimization options on the same computer (sensitivity to rounding-off errors)" gives

another example of this phenomenon.

This leads us to an obvious consequence: sometimes, it will

be impossible (or risky) to implement a numerical method due to the fact

that compilers play a role we cannot neither neglect nor master.

To use more digits for computations does not seem to be the solution.

Experiments were made using bc

("arbitrary-precision arithmetic language").

The following table gives the iteration number n

where a wrong integer part appears according to the number of decimal

digits used for the computation (scale parameter):

scale = 010 020 030 040 050 060 070 080 090 100

n = 035 070 105 136 169 199 230 265 303 335

The gain obtained cannot justify the cost...

1.3 - Heterogeneous parallelism:

the tools available today enable us to establish computation

methods based on heterogeneous parallelism. For models which show the

chaotic behaviour described above, two types of "malfunction" can be expected:

- problems linked to rounding errors that are indigenous to each

machine (i.e. the errors listed below),

- problems of rounding errors

incoherence during cooperations of machines consisting of different

hardwares and compilers.

In order to illustrate this last point,

let us take the example of the Lorenz attractor. Even though, for the present

case in point, this is of little interest from

the point of view of performance, let us

suppose that instead of calculating the

vector (xn,yx,zn) three times on three

different machines, we calculate xn on

the first one, yn on the second, and zn on

the third (with the value calculated on one machine downloaded in the two others).

Errors made on each of the three coordinates could be of different "types"

; the trajectory which is then calculated for this system would again be different

from the three obtained previously. Finally, within this context and for

obvious reasons, this type of computation cannot be started on one

machine and finished on another (e.g. in the case of a breakdown) without taking

the precautions suggested by the method described

in paragraph 2 below.

An interesting experiment has already been done: two machines are used to compute

a rotation of the Lorenz attractor about its X axis. Here are the animations obtained

with 1000 iterations "Rotation about the X axis of the Lorenz attractor (1000 iterations), computed simultaneously on two different computers"

and 5000 iterations "Rotation about the X axis of the Lorenz attractor (5000 iterations), computed simultaneously on two different computers".

The first one (with a few number of iterations) does

not exhibit numerical anomalies (the discrepancies are much more smaller than the

pixel size), when the second one (with

5 times more iterations) is absolutely incoherent...

2 - Elementary method of detecting sensitivity to the accuracy of numerical values by re-sequencing arithmetic expressions:

in order to quickly highlight the effect of the way in which a problem is formulated and indicate

arithmetical incompatabilities between different machines (computers and

compilers), we propose to use the Verhulst dynamics study program (C or

Fortran, double precision). It is very short and can be input into any system in

a few seconds. Two stages are required:

Stage One:

by executing the

Verhulst dynamics study program, this stage indicates whether the proposed

method is applicable and provides the following information:

- if, at a fixed iteration number, the

five columns of values are different on a

given machine, it is because the compiler

used bases the generated code on the computer formulation

of the problem.

- if, by carrying out the same

calculation on two different machines,

the two sets of results differ, it is either

because the two arithmetical units do not

operate in a similar way , or because the

two compilers analyse the expressions in

a different way (these two conditions

may exist simultaneously).

Stage Two:

if the results of the first stage are positive (i.e. if the

compiler used on a given machine bases the generated code on the format of the

programmed expressions), a very simple method of detecting program sensitivity

to the accuracy of computation (and also,

of course, to the accuracy of initial conditions) may be implemented. It

exploits the fact that, under these

circumstances, the usual properties of multiplication no longer apply

(associative law and distributive law as compared to addition). The method requires:

- locating the "sensitive" areas in the

program (i.e. the code sequences,

usually very localised, in which the

iterative and non-linear processes are implemented),

- re-writing these sequences in two or three different ways (e.g. by

re-sequencing multiplications with several factors or implementing the

distributive law of multiplication as compared to addition),

- executing each version of the program with the same parameters and

initial conditions, and comparing the results obtained.

This method, does not affect the performance of the program or increases

its memory requirements.

3 - Contribution of K language with regard to the test for problems subject to the accuracy of numerical values:

we have defined a portable programming and co-operation environment for use within a

heterogeneous environment. It includes a prefixed language known as K, which

facilitates the test described above. Let us take the example of a three-factor

multiplication ('A','B' and 'C' representing any three expressions):

AxBxC

In K, it is generally written and defined as follows:

MUL3(A,B,C) ==> MUL2(A,MUL2(B,C))

In the same way the general expression:

Ax(B + C)

is written and defined by:

DIS2(A,B,C) ==> MUL2(A,ADD2(B,C))

('ADD2(,)' and 'MUL2(,)' are representing respectively the addition and

multiplication of any two expressions). As the proposed K language can be

redefined at compile time, it is possible,

in this case for example, to change the definition of 'MUL3(A,B,C)' in a

specific area of the program so that it becomes:

MUL2(B,MUL2(C,A))

In the same way, 'DIS2(A,B,C)' may be redefined as:

ADD2(MUL2(A,B),MUL2(A,C))

Thus without having to reprogram certain areas (and run the risk of incorrect

problem reformulation), it is possible with K to produce a different generated

code automatically, without having to change the program itself; this offers the

twofold advantage of safety and rapidity. Note that this could be incorporated into

existing compilers relatively easily. During expression analysis, an option

would enable the operator to select a "jumbling" of the sequence of

operations, in accordance with one or more of the rewriting procedures

described below (precautions may need to be taken when optimising the generated code).

4 - Conclusion:

It is highly probable that models which are much more complex than those used

below for demonstration purposes, in particular the ones used for

the study of the Solar System stability (see "N-body problem integration (N=4: one star, one heavy planet and one light planet with a satellite) computed on three different computers (the Red one, the Green one and the Blue one: sensitivity to rounding-off errors)") or for long-term

weather forecasting (whose sensitivity to initial conditions is widely accepted),

depend on the computers and program syntax which enable them to be studied!

It is therefore essential to detect this behaviour and be aware of the "limits" of

such models. Unfortunately, the very simple method proposed here, or even

the method proposed, for example, by

Jean Vignes and Françoise Chatelin [03],

only serve to warn the user of the risks involved, but in no way do they provide

a correct result. Theoretical results have already been expounded [04].

Unfortunately, they only study a few specific systems (e.g. Henon attractor)

and show that, over a finite period of

time, there is always a real orbit close to

a "noisy" orbit. However, this advantage is provided at the cost of a change in the

initial conditions, thus inhibiting the study of the development of a system

with given initial conditions.

Today, when both fundamental and

applied research relies so heavily on computers, it is essential that users

(engineers, scientists and students) should be perfectly aware of the dangers,

and that efforts should be made to find real solutions to this very real problem, if

such solutions exist.

(more visualizations)

- [01]

"Chaos and Fractals, New Frontiers of

Science", Hans O. Peitgen, Peter H. Richter,

Saupe, Springer Verlag, 1992.

- [02]

"The beauty of fractals", Hans O.

Peitgen, Peter H. Richter, Springer Verlag,

1986.

- [03]

"Les Erreurs traquées", Françoise

Chatelin, Jean Vignes, Pour La Science, numero

131, Septembre 1988.

- [04]

"Numerical orbits of chaotic processes

represent true orbits", Stephen M. Hammel,

James A. Yorke, Celso Grebogi, Bulletin of the

American Mathematical Society, Volume 19,

Number 2, October 1998.

Copyright © Jean-François COLONNA, 1995-2024.

Copyright © France Telecom R&D and CMAP (Centre de Mathématiques APpliquées) UMR CNRS 7641 / École polytechnique, Institut Polytechnique de Paris, 1995-2024.